Long-Running Coding Agents Raise the Bar on Builder Literacy

When Coding Agents Run for Weeks, Software Stops Being Prompt-Driven and Becomes Systems-Thinking-Driven Engineering Work.

Yesterday, just in passing in another article, I asked a simple question: what does 2026 hold for AI-enabled software development, now that coding agents can run long-horizon tasks in parallel, at least to some extent?

I am not good at predictions, so I turned the question back to the reader: your guess is as good as mine.

But something important has shifted beneath the surface, and two recent pieces make that shift impossible to ignore.

One comes from Anthropic, describing how they keep long-running agents coherent across context resets.

The other one, just two days ago, comes from Cursor, describing how they coordinate swarms of agents working in parallel on the same codebase for days and weeks at a time.

At first glance, these look like two different problems.

One is about continuity.

“Agents still face challenges working across many context windows. We looked to human engineers for inspiration in creating a more effective harness for long-running agents.”

The other is about concurrency.

“We’ve been experimenting with running coding agents autonomously for weeks.

“Our goal is to understand how far we can push the frontier of agentic coding for projects that typically take human teams months to complete.”

They are different problems.

But they are also symptoms of the same underlying pressure.

Agentic coding is no longer about generating code.

It is about keeping intent intact as the build process spans time, tools, environments, files, and multiple autonomous actors infused with LLMs.

Once agents can work for days or weeks, the bottleneck is no longer model intelligence.

It is coordination.

And coordination is a systems problem.

Not a prompt problem.

No number of killer prompts can streamline coordination across the context windows of coding agents handling different aspects of a build.

At first glance, Anthropic’s contribution might be misread as an “agent trick.” I know because I misread it that way myself, until I read the latest piece from Cursor.

It is not.

What the team at Anthropic actually shows is that long-running work collapses unless intent is made durable.

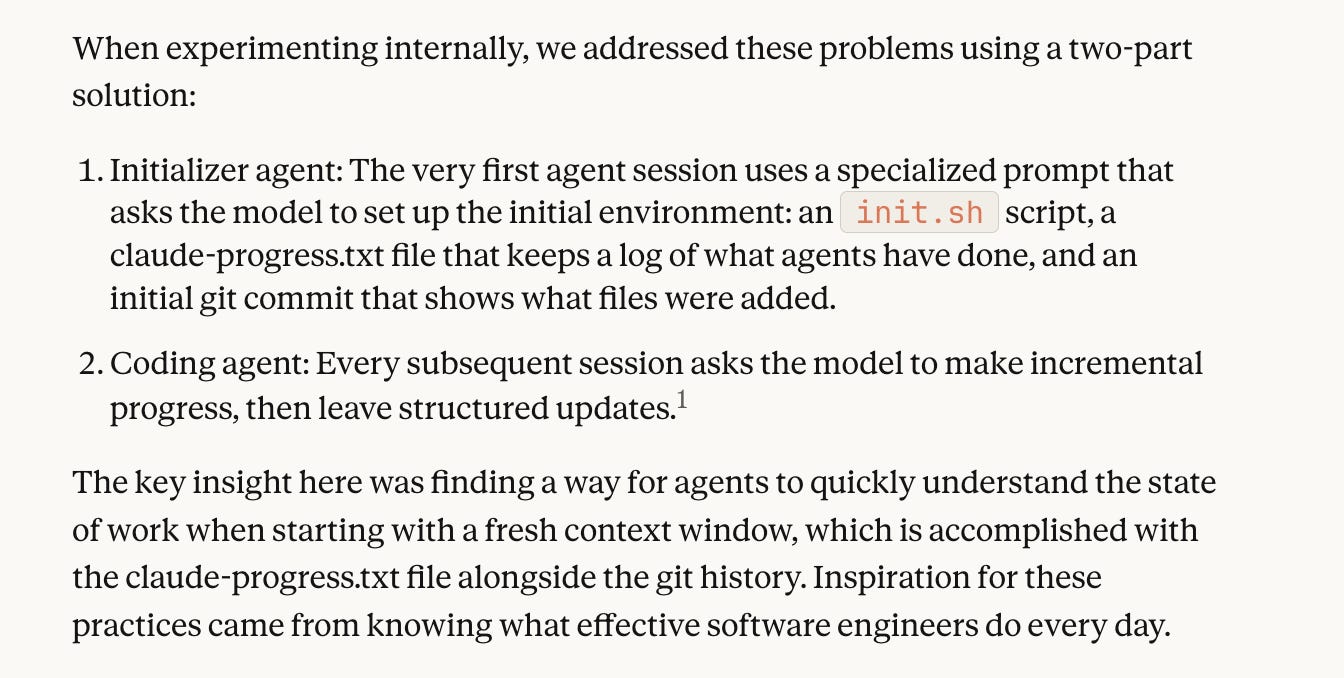

Their initializer and coding roles are not special agents in the magical sense. They are more like disciplined ways of re-anchoring context using artifacts that survive memory loss: progress logs, feature lists, tests, and commit history.

The bet is simple.

If context cannot be trusted, structure must be.
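To make "artifacts that survive memory loss" concrete, here is a minimal Python sketch of a session re-anchoring itself from a file on disk rather than from model memory. It is an illustration under stated assumptions, not Anthropic's harness: the file name `agent_state.json`, the status value `"passing"`, and every function name are hypothetical.

```python
import json
import tempfile
from pathlib import Path
from typing import List, Optional

# Hypothetical artifact name; the source only mentions progress logs and feature lists.
STATE_FILE = "agent_state.json"


def load_state(workdir: Path) -> dict:
    """Re-anchor a fresh session from disk instead of from model memory."""
    path = workdir / STATE_FILE
    if path.exists():
        return json.loads(path.read_text())
    # First session: nothing has been attempted yet.
    return {"features": {}, "log": []}


def record(workdir: Path, state: dict, feature: str, status: str, note: str) -> None:
    """Update the feature list, append a log entry, and persist both."""
    state["features"][feature] = status
    state["log"].append({"feature": feature, "status": status, "note": note})
    (workdir / STATE_FILE).write_text(json.dumps(state, indent=2))


def next_feature(state: dict, planned: List[str]) -> Optional[str]:
    """Pick the first planned feature that is not yet marked as passing."""
    for name in planned:
        if state["features"].get(name) != "passing":
            return name
    return None


if __name__ == "__main__":
    planned = ["login", "search", "export"]
    with tempfile.TemporaryDirectory() as tmp:
        workdir = Path(tmp)
        # Session 1 finishes one feature, then its context is lost.
        session_one = load_state(workdir)
        record(workdir, session_one, "login", "passing", "tests green")
        # Session 2 starts cold and recovers its place from the file.
        session_two = load_state(workdir)
        print(next_feature(session_two, planned))  # search
```

The second session never sees the first session's context. It only sees what was written down, which is the point of the bet.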

The team at Cursor approaches the same cliff from the opposite side.

Their concern is not memory loss across sessions, but breakdowns that happen when too many agents operate at the same level.

Agents in a swarm slow, stall, and block one another.

Risk aversion creeps in.

Work fragments.

So they introduce hierarchy.

Planners decide what should happen.

Workers execute narrowly scoped tasks.

A judge agent decides whether the system should continue or reset.

Different mechanisms.

Both expose the same coordination limit.

Once agents run long enough and touch enough of the codebase, prompt cleverness stops being the deciding factor. What determines whether progress holds or collapses is how the work is structured, logged, reviewed, and handed off between agents.
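The planner, worker, and judge roles described above can be sketched in a few lines of Python. This is a toy under loud assumptions, not Cursor's implementation: the planner returns a fixed list where a real one would call a model, and the `max_failures` threshold the judge uses is invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Task:
    name: str
    done: bool = False


def planner(goal: str) -> List[Task]:
    """Decide what should happen. A real planner would call a model here."""
    return [Task(f"{goal}: step {i}") for i in (1, 2, 3)]


def worker(task: Task, run: Callable[[str], bool]) -> None:
    """Execute one narrowly scoped task and record whether it succeeded."""
    task.done = run(task.name)


def judge(tasks: List[Task], max_failures: int = 1) -> str:
    """Decide whether the system should continue or reset (hypothetical rule)."""
    failures = sum(1 for t in tasks if not t.done)
    return "continue" if failures <= max_failures else "reset"


def cycle(goal: str, run: Callable[[str], bool]) -> str:
    """One pass through the hierarchy: plan, execute each task, then judge."""
    tasks = planner(goal)
    for task in tasks:
        worker(task, run)
    return judge(tasks)


if __name__ == "__main__":
    # One failing step out of three is tolerated by the default threshold.
    print(cycle("add search", lambda name: not name.endswith("3")))  # continue
    # Every step failing trips the judge.
    print(cycle("add search", lambda name: False))  # reset
```

Each role has one job and one return value, so the decision to continue or reset comes from recorded task outcomes rather than from any single agent's prompt.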

The distinction between continuity and concurrency is most evident in “sustaining coherent work over long horizons”, which “is a foundational capability on the path towards more general, reliable” AI-infused software creation platforms and tools.

Anthropic is solving continuity across time.

How does a system prevent drift when work spans many sessions, each with partial memory?

Cursor is solving concurrency across space.

How does a system prevent collapse when hundreds of coding agents touch the same evolving artifact?

But both are converging on the same answer.

You do not scale agentic coding by making agents smarter.

You scale it by constraining them.

By defining roles.

By externalizing state.

By forcing clarity into artifacts that coding agents must respect.
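One way to picture "constraining them" is a role that carries an explicit list of directories it may modify, checked against the files an agent actually changed. The Python sketch below is an assumption-laden illustration: the `Role` type, the directory names, and the idea of gating on changed paths are hypothetical and do not come from either team's write-up.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath
from typing import List, Tuple


@dataclass(frozen=True)
class Role:
    """A named role plus the directories it is allowed to modify."""
    name: str
    allowed_dirs: Tuple[str, ...]


def in_scope(role: Role, path: str) -> bool:
    """True if a repo-relative path sits inside one of the role's directories."""
    parts = PurePosixPath(path).parts
    if path.startswith("/") or ".." in parts:
        return False  # refuse absolute paths and parent-directory escapes
    for allowed in role.allowed_dirs:
        prefix = PurePosixPath(allowed).parts
        if parts[: len(prefix)] == prefix:
            return True
    return False


def out_of_scope(role: Role, changed_files: List[str]) -> List[str]:
    """Return the changed files this role was not allowed to touch."""
    return [f for f in changed_files if not in_scope(role, f)]


if __name__ == "__main__":
    api_worker = Role("api-worker", ("src/api", "tests/api"))
    changed = ["src/api/routes.py", "src/db/schema.sql", "tests/api/test_routes.py"]
    print(out_of_scope(api_worker, changed))  # ['src/db/schema.sql']
```

Comparing path components rather than string prefixes means `src/api_v2/` does not slip through as if it were `src/api/`.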

In other words, long-running agentic coding is quietly turning into systems engineering.

Engineering rigor takes center stage.

This matters because it exposes a damaging misconception spreading about the new craft of building software with AI, particularly in Vibe Coding.

The misconception is that longer-running agents can handle everything if you clearly outline your idea for your software product-to-be in a “killer prompt” that captures it perfectly from the outset.

But that could not be further from the truth.

The team at OpenAI perfectly captures the hidden fact:

“As Codex becomes more capable of long-running tasks, it is increasingly important for developers to review the agent’s work before making changes or deploying to production.”

If you give coding agents a clear goal and bounded tools, they will grind relentlessly.

They will make progress.

They will also scale your mistakes with equal efficiency.

Architectural ambiguity does not slow them down.

It accelerates failure.

And such ambiguity stems mostly from a faulty logical characterisation or a misunderstanding of the problem you are trying to solve in your build.

This is why architectural awareness becomes non-negotiable as agents grow more capable, so that you, as the builder, hold the mental model of the product being built.

Without a picture of your build-to-be that is shared with the LLMs powering your chosen AI-assisted coding tools, agentic coding features do not “figure it out” on their own.

Yes, they are very good at generating code, but they are nowhere near understanding how software products are architected end to end, with every nuance that gets dropped into their loop.

They simulate generic solutions drawn from prior patterns.

That is most likely why fast iteration gets mistaken for the right direction.

Code gets generated, files change, commits pile up — but the build drifts because there is no shared picture of the product guiding those changes.

The builder is left with a huge number of artifacts to parse through, and no idea how to decode the logs the coding agents generated on the fly at every stage of the build process.

This is not a problem waiting somewhere in the future for those building with AI. It is already visible in how long-running agent workflows behave today, from the end of 2025 onwards.

Progress is now logged, staged, and inspected.

Planning surfaces appear before execution.

Agents explain what they will do before doing it.

Work is broken into inspectable chunks.

Failure becomes observable instead of silent.
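The pattern in the lines above (plan first, execute in chunks, make failure visible) can be sketched as a small Python runner. It is a simplified illustration, not any vendor's workflow: the step names, the status strings, and the choice to stop at the first failure are all assumptions.

```python
from typing import Callable, Dict, List, Tuple

# A step is a label plus a zero-argument action that raises on failure.
Step = Tuple[str, Callable[[], None]]


def run_plan(steps: List[Step]) -> List[Dict[str, str]]:
    """Publish the whole plan first, then execute it one inspectable chunk at a time."""
    # The plan exists as data before anything runs, so it can be reviewed.
    report = [{"step": name, "status": "planned"} for name, _ in steps]
    for entry, (_, action) in zip(report, steps):
        try:
            action()
            entry["status"] = "ok"
        except Exception as exc:
            # Failure is recorded where it happened instead of being swallowed.
            entry["status"] = "failed"
            entry["error"] = repr(exc)
            break  # later steps stay "planned", showing what was never attempted
    return report


def failing_tests() -> None:
    """Stand-in for a test run that does not pass."""
    raise RuntimeError("2 tests failing")


if __name__ == "__main__":
    plan = [
        ("write migration", lambda: None),
        ("run tests", failing_tests),
        ("deploy", lambda: None),
    ]
    for line in run_plan(plan):
        print(line)
```

Reading the report top to bottom shows what ran, what broke, and what was never reached, which is the difference between an observable failure and a silent one.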

In a separate 3,000-word-plus write-up, How Are the Latest Model Improvements Crystallising Inside CodeGen Platforms and AI-Assisted Coding Tools, I go through how the latest model improvements crystallize inside AI-infused software creation platforms and tools. I recommend it if you have the energy to dive in.

For builders, especially non-technical ones drawn in by the promise of Vibe Coding, this is the real shift to internalize.

Everyone may be a programmer now.

Not everyone is a builder.

It is a shift that is playing out in real time.

Chris Gregori, in his recent post “Code Is Cheap Now. Software Isn’t,” perfectly captures the shift:

There is a useful framing for this shift: AI has effectively removed engineering leverage as a primary differentiator. When any developer can use an LLM to build and deploy a complex feature in a fraction of the time it used to take, the ability to write code is no longer the competitive advantage it once was. It is no longer enough to just be a “builder.”

Instead, success now hinges on factors that are much harder to automate.

Taste, timing, and deep, intuitive understanding of your audience matter more than ever.

You can generate a product in a weekend, but that is worthless if you are building the wrong thing or launching it to a room full of people who aren’t listening.

Builder literacy in 2026 will not be about writing syntax.

We are well past that.

It will be about holding architectural intent steady while agents operate across contexts you no longer fully inhabit.

Speak Dev, so you can name what exists.

Think in scaffolds so you can see how the various building blocks that make up modern software products fit together.

Agents do not run on vibes.

They need structure.

And the responsibility for that structure does not belong to the model.

It belongs to you.

Software creation has long shifted from writing code to shaping the underlying systems that make up software products, with clarity about how everything comes together before the first line of code is generated by the coding agents.

In the words of Chris Gregori:

“Tools have changed [but] the fundamentals of good engineering have not.”

Spot on about the constraint paradox. The Anthropic/Cursor convergence you point out is especially telling because both hit the same wall from opposite directions (continuity vs concurrency). The idea that "agents scale mistakes with equal efficiency" cuts through the hype perfectly. I've seen this with multi-day runs where architectural ambiguity compounds silently until logs become archeological artifacts no one can decode. Systems thinking isn't optional anymore, it's the bottleneck.