Yes, Models Advance. Platforms Verticalize. But Fundamentals Still Decide Who Thrives.

Why the Vibe Coding Training Wheels Protocol matters more than any new AI tool.

TL;DR

Consider this a letter to the Peter Parkers of Vibe Coding, the ones chasing new features from every CodeGen platform and AI-assisted coding tool faster than they can understand what those features replace.

As models keep advancing and platforms keep adding new abstractions through vertical integration, building with AI can feel almost effortless. Yet every release still needs a human in the loop: human reasoning, judgment, and taste remain more vital than ever.

Launch after launch, it feels like everything’s changing. Yet the real shift isn’t in who writes the code; it’s in how much more of the structure gets abstracted away with each upgrade. That leaves us, the humans, responsible for staying oriented: defining purpose, shaping architecture, exercising judgment, weighing trade-offs, and maintaining the mental model that keeps the system coherent.

One thing is certain: the way we build software has changed for good. Without maintaining architectural awareness of what’s being abstracted away, you can’t build software that preserves intent while keeping its architecture sound as it grows.

Every couple of weeks or so, a new generation of AI coding platforms and tools emerges, promising to change everything.

That rhythm of launch after launch has been the new normal since late 2023.

In line with that acceleration, Anthropic CEO Dario Amodei even said (seven months ago) that AI could soon be doing most of the coding, and that developers might be replaced within months, given the rapid progress of the models powering these tools.

Whichever side you take in the debate over whether AI will replace traditional developers, the process of building software has changed forever.

Across the spectrum, from AI coding tools like GitHub Copilot, Cursor, Windsurf, Kiro, Augment Code, and Warp, to CodeGen platforms like Lovable, Bolt, v0, and Replit (and yes, it’s worth repeating that Replit is far more than a simple CodeGen platform), we’ve seen launch after launch, each promising another revolution.

The trend cycle keeps spinning.

Claude Code, Codex, and Warp have led the buzz in recent months, while Lovable and Bolt still hold the spotlight in the CodeGen category alongside Replit. New entrants like Base44 and Leap have also joined this wave.

Despite their differences, all these platforms and tools arrive with the same two-sided chorus:

On one side, AI influencers warn, “If you’re not using these platforms or tools, you’re falling behind.”

On the other side, the teams behind the platforms and tools promise to eliminate friction: “no more error loops,” “all-in-one backends,” “seamless automations and integrations.” Each launch feels like a mini-revolution until the newness fades.

Just the other day, OpenAI’s launch of its Atlas browser sparked another wave of AI influencer videos and shorts, and “Chrome is dead” became the talk of the influencer community.

Don’t get me wrong: the developers behind the CodeGen platforms and AI-assisted coding tools have done impressive work.

But if you’ve actually tried to build a real product with AI, you know that after a few days the tools all start to feel the same. The initial excitement gives way to the familiar frustrations of working with AI, frustrations that are becoming the new normal, especially if you are not a developer.

The hype deflates as the friction you thought the AI had handled suddenly reappears. You switch to another model, then another, each promising smoother runs, yet the same underlying problems always return.

Look closely and you’ll see it. What’s really happening isn’t new intelligence — it’s new abstraction. Most platforms and tools run on the same handful of foundation models from OpenAI, Anthropic, and Google, just wrapped and repackaged in different ways.

The truth is, every CodeGen platform and AI-assisted coding tool is riding the same wave: ongoing advances in model capability, such as longer context, fewer hallucinations, better reasoning, and improved tool use. It’s the models doing the heavy lifting, not the wrappers.

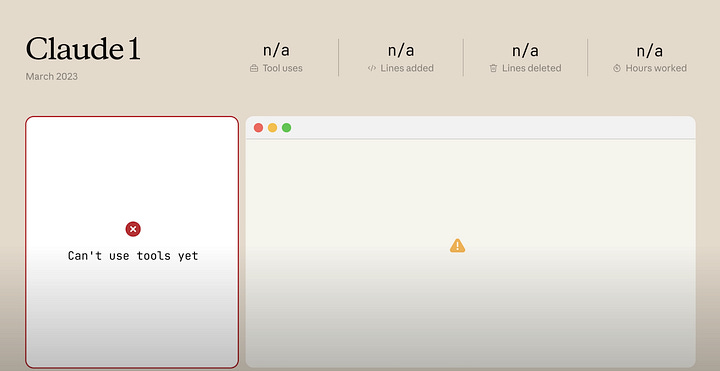

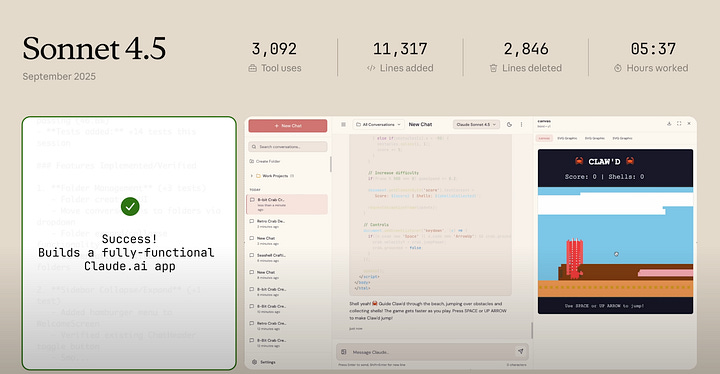

In a little over two years, from Claude 1 to Claude 4.5, Anthropic’s models evolved from a static text generator into a reasoning system that can write, repair, test, and validate code across multi-step runs.

That’s not just a user experience upgrade or a polished interface; it’s a genuine leap in understanding.

Claude 1 couldn’t use tools. Claude 4.5 orchestrates them. (The price per token has also increased, but that’s a tale for another day.)

The reality is that the reasoning capabilities of foundation models are improving, while the platforms built on top are becoming more convenient — some through vertical integration, others by embedding established software development paradigms, such as spec-driven planning, directly into the workflow.


Subscribe to Vibe Coding to keep reading this post and get 7 days of free access to the full post archives.